Solving the Achilles Heel of Audit Security

- Date: Feb 29, 2024

- Read time: 10 minutes

The limitations of traditional file system security are hindering innovation in cyberstorage.

Background

Auditing (who did what and when) is the cornerstone of regulatory compliance. All major vertical standards (HIPAA, PCI, FedRAMP, NIST) include requirements for the auditing of users and data on any system that stores or processes data. Audit data is also leveraged in cyberstorage security solutions that protect customer file and object data from cyber threats. But to date, no industry-wide standard format or protocol exists to help ensure that 3rd party vendors can easily integrate. In this post, we’ll review the landscape of auditing in large, scale-out file systems used to run modern workloads, along with the issues around building resilient, high-performance security solutions on a modern audit security framework.

The Audit Protocol Landscape

Many NAS vendors that implemented auditing did so many years ago, when the only common option was SYSLOG alongside many different proprietary interfaces. Some of the legacy protocols used by NAS vendors include:

- SYSLOG

- SNMP

- CSV-delimited log files

- Log files with XML field definitions

- Proprietary socket protocol with external software middleware that exposes proprietary APIs

- REST API over HTTPS

Looking at this list, we see SNMP and SYSLOG, both of which are very old protocols with major shortcomings when used for security use cases. Although encrypted SYSLOG and SNMP implementations are possible, they’re rarely used and are complicated to configure, so these protocols are essentially ‘clear text’ most of the time. Log files are typically read over SMB or NFS, protocols whose encryption options are rarely enabled. In addition, NFS encryption capabilities are extremely complicated to configure. Given that, these protocols are not a great foundation for building robust, secure auditing solutions.

But what about REST API over HTTPS? While it might appear to be a reasonable option, the reality is that it’s not a good choice either, as the overhead to process events with polling or pushes (webhooks) of audit log data doesn’t scale well. In addition, it suffers from HA design complexities with external load balancing. It also creates the need for event queues in the storage device and inside the security application to persist events before they are processed, adding even more complexity. This makes it inefficient for publishing once and allowing consumption from many different applications that require the audit security event stream. And if you’re dealing with more than a single consumer, the HTTP data is duplicated across each consumer.

Let’s look at a typical Scale-Out NAS device architecture to explore the best functional requirements for providing a scalable, secure audit framework.

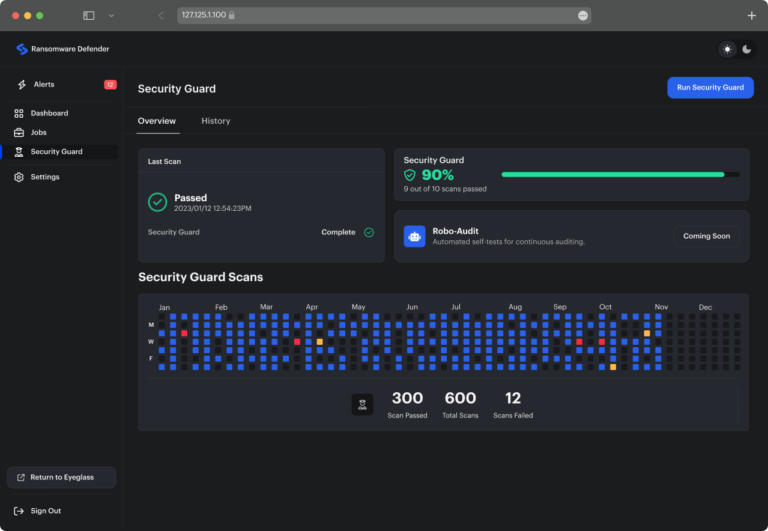

In the diagram above, NAS cluster nodes have local logs that store file system activity. In the middle, the audit ingestion function within security software can have HA ingestion capabilities and scale-out for performance requirements.

Finally, at the bottom we have the application logic for analyzing and processing the audit data for real time processing or indexing to store in a database for historical analysis. These two functions consume the event stream but each has different processing latencies and will consume audit data at different rates.

When looking at the functional blocks in the diagram, it’s clear that the failure of a storage node or an application functional block is likely, and a solution is required to ensure continuous processing of audit data across all failure scenarios.

Which functional requirements would address the shortcomings of legacy audit protocols?

Let’s look at the requirements needed to build a robust, secure and HA/Scalable audit framework.

- Inflight encryption between NAS and Security applications

- High availability option to handle node failures in the NAS cluster or in the security application’s ingestion and processing.

- Instant restart. The ability to checkpoint forwarding or processing of audit data to allow another node to “pick up where the previous node left off”

- Delivery guarantees from Clustered NAS → Ingestion → Processing. Auto detect missed audit messages and retrieve all missing events.

- Scale-out processing. The design should scale as IO rates or user event rates scale. Adding ingestion or processing nodes should be a dynamic process that scales capacity linearly.
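To make the delivery guarantee requirement more concrete, here is a minimal Python sketch of how a receiver might auto-detect missed audit messages. It assumes each audit event carries a monotonically increasing sequence number, which is an assumption made for illustration and not something every NAS vendor provides. The function name `find_missing_ranges` is hypothetical.

```python
from typing import Iterable, List, Tuple


def find_missing_ranges(
    received: Iterable[int], first: int, last: int
) -> List[Tuple[int, int]]:
    """Return inclusive (start, end) ranges of sequence numbers in
    [first, last] that never arrived.

    Assumes (hypothetically) that the publisher stamps every audit
    event with a gap-free, increasing sequence number.
    """
    # De-duplicate and sort so out-of-order or repeated deliveries are tolerated.
    seen = sorted({s for s in received if first <= s <= last})
    gaps: List[Tuple[int, int]] = []
    expected = first
    for seq in seen:
        if seq > expected:
            # Everything between the expected number and this one is missing.
            gaps.append((expected, seq - 1))
        expected = seq + 1
    if expected <= last:
        # The tail of the window never arrived.
        gaps.append((expected, last))
    return gaps


# Events 3-4, 7-8 and 10 were lost somewhere between the NAS and ingestion.
missing = find_missing_ranges([1, 2, 5, 6, 9], first=1, last=10)
print(missing)
```

The returned ranges are what an ingestion node would need to request again from the storage device to satisfy the "retrieve all missing events" part of the requirement.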

What are key characteristics of the audit data itself?

- Time series data that includes time stamps with order dependency across cluster NAS nodes.

- Delimited entries have common values across all vendors but no common schema. Timestamp of an event is the common field across all nodes in the NAS cluster, helping to ensure order dependency for the audit data.

- Examples of common fields across vendor audit logs include Date and Time Stamp; User ID; User SID; UID; Action Performed; File Name; Path to File; and Occurrence Count. Event-specific metadata examples include Bytes Read or Written; and Cluster Node that Processed the Event.
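As an illustration of these characteristics, the sketch below models an audit event with a subset of the common fields listed above and merges per-node logs into a single stream ordered by timestamp. The class name `AuditEvent`, its field names, and the sample values are hypothetical, since no common schema exists today; the sketch also assumes each node's own log is already in timestamp order.

```python
import heapq
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Iterable, Iterator


@dataclass(frozen=True)
class AuditEvent:
    """Hypothetical schema built from fields common to vendor audit logs."""
    timestamp: datetime      # date and time stamp (microsecond granularity)
    node: str                # cluster node that processed the event
    user_id: str             # user ID
    action: str              # action performed
    path: str                # path to file
    bytes_transferred: int = 0  # event-specific metadata


def merge_node_logs(per_node: Iterable[Iterable[AuditEvent]]) -> Iterator[AuditEvent]:
    """Merge per-node logs (each already time-ordered) into one ordered stream."""
    return heapq.merge(*per_node, key=lambda e: e.timestamp)


def ts(microsecond: int) -> datetime:
    """Helper for building sample timestamps that differ only by microseconds."""
    return datetime(2024, 2, 29, 12, 0, 0, microsecond, tzinfo=timezone.utc)


node1 = [
    AuditEvent(ts(100), "node-1", "alice", "read", "/ifs/data/report.docx", 4096),
    AuditEvent(ts(300), "node-1", "alice", "write", "/ifs/data/report.docx", 8192),
]
node2 = [
    AuditEvent(ts(200), "node-2", "bob", "delete", "/ifs/data/old.tmp"),
]

merged = list(merge_node_logs([node1, node2]))
for event in merged:
    print(event.timestamp.isoformat(), event.node, event.user_id, event.action)
```

The timestamp is the only field the merge relies on, which mirrors the point above that it is the one field common across all nodes in the cluster.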

How do current protocols stack up?

The most common protocol used to send audit data to 3rd party applications is SYSLOG. Let’s see how SYSLOG aligns to the key functional requirements below:

- Inflight Encryption (SYSLOG score: Fail). It is possible to implement SYSLOG encryption; however, it’s rarely implemented and is not the OS default. It requires X.509 certificates on both sender and receiver.

- High availability (SYSLOG score: Fail). This requirement would need SYSLOG load-balancing across physical or logical hosts. This is not available as an out-of-box feature of devices. It also requires an agreed-upon load balancing method to help ensure that time series data is processed in the order that it occurred on the device, based on timestamp with microsecond granularity.

How should we address the Achilles heel of Audit Security?

Without a standardized audit data schema and a robust event publishing API, integrations with security products will continue to be the weak link. What that means is that the “good guys” are fighting with one hand tied behind their backs. Storage device integrations take longer and provide less security, lacking the HA and scalability capabilities required to create a modern framework.

The implementation illustrated below is intended to provide a blueprint for security and storage device vendors to consider adopting a modern audit security framework to address all the shortcomings of the current solutions.

Cyberstorage blueprint for a resilient, secure, and scalable auditing framework

The solution to streaming Audit Events between storage devices and security applications should be based on Apache Kafka. Let’s review the key architectural points of why this event streaming platform is well-suited for security event processing. The diagram above shows the simplified interconnect between publishers and consumers.

The Kafka event streaming platform addresses several key requirements needed to build next generation security solutions. This paper is not a detailed explanation of how Kafka works, but it will focus on highlighting why it is well suited to build robust security applications.

- Payload independent. Kafka messages can be structured (e.g. JSON) or binary, so it’s possible to transmit any type of data in messages between storage devices (publishers) and security applications (consumers).

- Concurrent high speed or low speed message consumption. This allows consumers (security applications) that implement different logic for different functions to consume the same source audit data at different rates. Consider real-time event processing for malicious behavior analysis and a second function to index, compress and store audit data in a database for historical analysis. These two functions will process audit events at very different rates. An event streaming platform lets storage devices publish once while each function consumes events at the rate it requires, so real-time, near-real-time, and slow processing scenarios can all be handled. Kafka meets this requirement with consumer groups, which pool similar consumption logic into a group and allow each group to process the published event stream at its own rate. Many other aspects of consumer group behavior can be tuned.

- Guaranteed delivery and processing semantics. To avoid losing audit messages, the publisher needs acknowledgement of batches of events that are published to the event streaming platform. Consumers need to know they have all the audit messages that were published. Kafka helps to solve this by using event stream offsets to track which messages have been published and which have been acknowledged. This allows the consumer to detect missing events and reprocess them as necessary. It also allows a consumer to restart and pick up processing from where it left off.

- Store and Forward or cut through processing. Since Kafka allows different consumption rates, real-time processing does not feel the impact of slower processing logic within the application. In addition, the scaling of nodes can be different depending on the performance objectives of each function. The ability to persistently store published messages allows a time window of stored audit data to allow a process that needs to go back in time to reprocess or analyze historical event data without requiring a separate database.

- Continue where you left off. This enables the concept of “checkpointing”. A checkpoint allows a process to always know the last processed event, so nodes can shut down, reboot, or fail while “Process Once” semantics guarantee that processing picks up exactly where it left off before the restart. This removes the need for security applications or storage devices to handle missing or unprocessed event data created during an outage or reboot/restart.

- Hitless Scale-Out Processing. The consumer group concept allows even distribution of audit data into sub-consumer group constructs that enable scale-out processing. This design allows new processing nodes to join the security application and pick up processing workload without impacting existing processing logic. In dynamic environments, a burst of events can be met with additional processing nodes, which can be removed after the burst has been processed. This also removes the need for complex scale-up and scale-down logic in security applications and storage devices. SaaS and high performance use cases will benefit from this dynamic resource scaling.

- High Availability. Kafka allows processing nodes to fail, join/leave, reboot, or shut down, without any logic needed within storage devices and security applications. Kafka’s design allows both publishers and consumers to ride over process failures and dynamic scaling without needing to write logic to handle all the failure scenarios. Contrast this with legacy protocols that require significant custom application code to achieve the same capabilities or external load balancing to achieve the same level of availability.

- Carrier Grade upgrades. The event streaming platform holds events and tracks processing progress. This enables application code to be upgraded without stopping publishers (storage devices) and consumers (security application nodes). A no-down-time upgrade capability requires events to continuously flow into the system as software is upgraded and nodes reboot. This is a critical requirement to avoid missing audit data or detections due to software upgrades.
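Several of the points above (publish once, consume at different rates, and continue where you left off) come down to an append-only log with an offset tracked per consumer group. The Python sketch below is a toy in-memory model of that idea only. It is not the Kafka client API, and the `EventLog` class, its method names, and the group names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


class EventLog:
    """Toy append-only log with per-group committed offsets.

    Illustrates the offset/checkpoint concept only; a real deployment
    would use a Kafka client library and brokers instead.
    """

    def __init__(self) -> None:
        self._events: List[str] = []
        # Each consumer group remembers the offset of the next event to read.
        self._committed: Dict[str, int] = defaultdict(int)

    def publish(self, event: str) -> int:
        """Append once; the returned offset acts as the acknowledgement."""
        self._events.append(event)
        return len(self._events) - 1

    def poll(self, group: str, max_events: int) -> List[str]:
        """Read up to max_events starting at the group's committed offset."""
        start = self._committed[group]
        return self._events[start:start + max_events]

    def commit(self, group: str, count: int) -> None:
        """Checkpoint: record that `count` more events were processed."""
        new_offset = self._committed[group] + count
        self._committed[group] = min(new_offset, len(self._events))

    def lag(self, group: str) -> int:
        """Number of published events the group has not yet processed."""
        return len(self._events) - self._committed[group]


log = EventLog()
for i in range(10):
    log.publish(f"event-{i}")  # the storage device publishes each event once

# A fast real-time detection function keeps up with the stream.
realtime_batch = log.poll("realtime", 10)
log.commit("realtime", len(realtime_batch))

# A slower indexing function processes a small batch, then its node "fails".
indexer_batch = log.poll("indexer", 3)
log.commit("indexer", len(indexer_batch))

# A replacement node in the same group resumes from the checkpoint.
resumed_batch = log.poll("indexer", 3)
print(resumed_batch, log.lag("realtime"), log.lag("indexer"))
```

Because each group keeps its own offset, the slow indexer never holds back real-time detection, and the replacement node needs no custom recovery logic beyond reading from the committed offset.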

Summary

An industry solution for simplifying the process of collecting audit data from storage devices is essential for accelerating innovation and improving device coverage by security applications. This will require a modern event-streaming solution that can build on a solid foundation and reduce time spent integrating with proprietary implementations.

How does Superna Technology stack up? Our entire product architecture is based on Kafka for both audit data ingestion and processing logic, providing us with the ideal foundation for a robust, scalable auditing protocol.

Prevention is the new recovery

For more than a decade, Superna has provided innovation and leadership in data security and cyberstorage solutions for unstructured data, both on-premise and in the hybrid cloud. Superna solutions are utilized by thousands of organizations globally, helping them to close the data security gap by providing automated, next-generation cyber defense at the data layer. Superna is recognized by Gartner as a solution provider in the cyberstorage category. Superna… because prevention is the new recovery!
