A new industrial revolution is being powered by Generative AI

- Date: Mar 21, 2024

- Read time: 10 minutes

The future is Generative AI… and securing that future requires a proactive approach that combines defensive and offensive security measures.

Overview

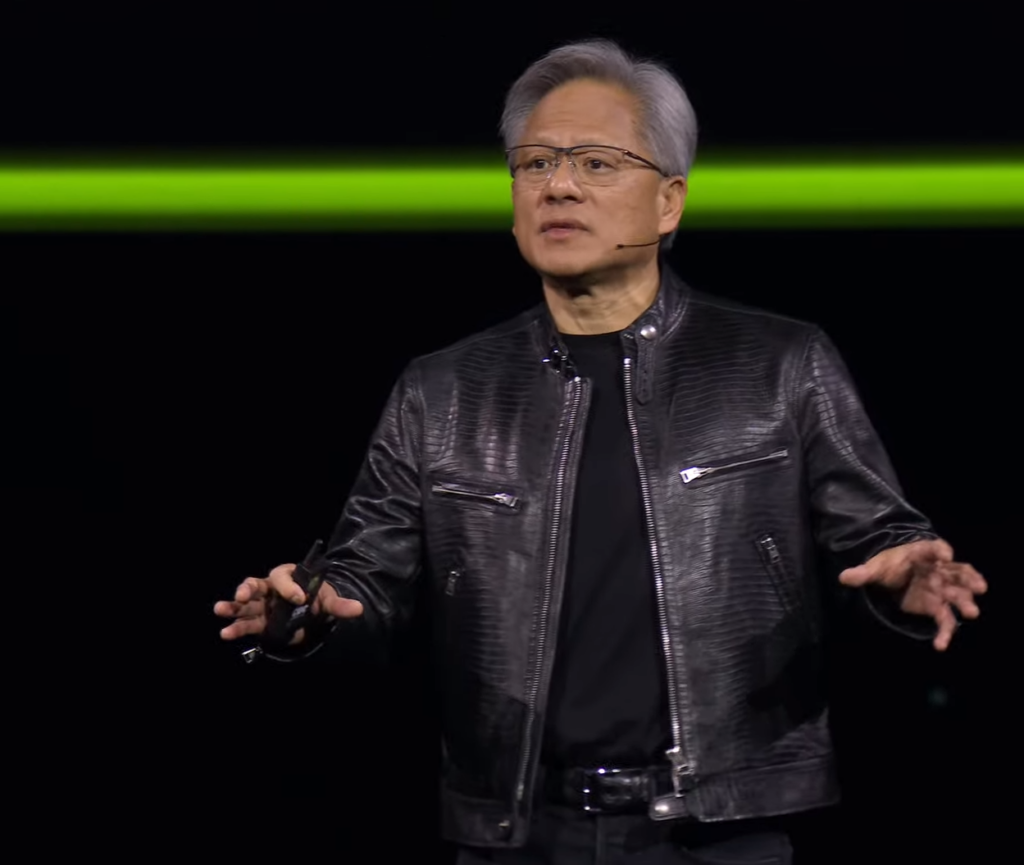

Nvidia’s 2024 GPU Technology Conference (GTC), under way now in San Jose, California, is the company’s premier event for AI innovators, developers, and enthusiasts. Nvidia CEO Jensen Huang kicked off his keynote with a flurry of announcements covering AI chips, products, and partnerships. One of the most important was the Blackwell computing platform, designed specifically to deliver the vastly greater processing power that generative AI and related technologies demand.

Named for David Harold Blackwell, a UC Berkeley mathematician who specialized in game theory and statistics and was the first Black scholar inducted into the National Academy of Sciences, the new architecture succeeds Nvidia’s Hopper architecture, launched just two years ago. Nvidia says Blackwell delivers up to 30 times the performance of Hopper on large language model inference workloads. Just as notable, it achieves that speedup while requiring significantly less energy.

“The amount of energy we save, the amount of networking bandwidth we save, the amount of wasted time we save, will be tremendous,” said Huang. “The future is generative… which is why this is a brand-new industry. The way we compute is fundamentally different. We created a processor for the generative AI era.”

Nvidia also announced a new product called NIM (Nvidia Inference Microservices), essentially a “container” of all the software an enterprise needs to put AI models to work. It includes APIs to popular foundation models, the software required to deploy open-source models, and links to popular business software applications. By pre-packaging the major components and hiding much of the deep technical complexity, NIM aims to make AI friendlier to the non-PhD user, with everything tightly integrated to maximize its usefulness. In this way, Nvidia is looking to strategically distance itself from challengers such as Intel, AMD, Cerebras, and others. Nvidia is no longer content to be just a supplier of chips. The game has gotten much bigger, and becoming a platform player is a significant step in that direction.

A new industrial revolution… one that’s based on Generative AI

It’s hard to overstate just how enormous the impact of generative AI will be on our world. Everything will be affected: content creation, healthcare, education, manufacturing, entertainment, business and finance, and pretty much everything else you can imagine. In healthcare alone, generative AI is already being used to generate synthetic data and to assist with medical image analysis, drug discovery, personalized medicine, medical training, and even robotic surgery. Some examples:

- Medical Image Analysis: Generative AI models, such as Generative Adversarial Networks (GANs), are used to generate synthetic medical images that closely resemble real patient data. These synthetic images can augment limited datasets for training medical imaging AI models, improving accuracy and performance in tasks like MRI and CT scan analysis, tumor detection, and organ segmentation.

- Drug Discovery and Development: Generative AI is used in drug discovery to generate novel molecular structures and compounds with unique, desired properties. AI models can simulate molecular interactions, predict drug-target interactions, and design new drug candidates, reducing costs and accelerating the drug discovery process.

- Personalized Medicine: By analyzing patient data – including genomics, medical records, and imaging data – we’ll be better able to generate personalized treatment plans and therapies. AI models can predict patient outcomes, identify genetic markers for diseases, and recommend tailored interventions based on individual patient characteristics.

- Medical Simulation and Training: Generative AI is being used to create realistic medical simulations and virtual patient models for medical training and education. These simulations allow healthcare professionals to practice procedures, diagnose medical conditions, and improve clinical skills in a safe and controlled environment.

- Natural Language Processing (NLP): NLP models powered by generative AI analyze unstructured medical data – such as clinical notes, research papers, and patient records – to extract insights, automate documentation, assist with medical coding, and support clinical decision-making by surfacing relevant information for healthcare providers.

- Healthcare Chatbots and Virtual Assistants: AI-powered healthcare chatbots and virtual assistants will interact with patients, answer medical queries, schedule appointments, provide medication reminders, and offer personalized health recommendations. This will improve patient engagement, support remote healthcare delivery, and enhance the overall patient experience.

- Medical Research and Innovation: By analyzing large-scale healthcare datasets, identifying patterns, discovering new associations, and generating hypotheses for further investigation, AI models will contribute to advances in disease understanding, treatment development, and healthcare delivery, both in-person and remote.

Digital twins and their use in simulating the real world for analysis and planning

And it doesn’t stop there. One segment of Huang’s keynote focused on the expanded use of digital twins. A digital twin is a virtual representation, or digital counterpart, of a physical object, process, system, or entity. It encompasses both the physical aspects (such as geometry, structure, and behavior) and the digital data and information associated with the object or system. Digital twins are already used across many industries and applications to simulate, monitor, analyze, and optimize real-world entities and processes. Some examples:

- Product Design and Development: In manufacturing and engineering, digital twins are used to create virtual prototypes of products and components. Engineers are able to simulate the behavior, performance, and interactions of these virtual prototypes before physical manufacturing, enabling rapid iteration, design optimization, and cost savings.

- Asset Management: Digital twins are used for asset management and maintenance in industries like aerospace, automotive, and energy. By creating digital twins of physical assets such as aircraft engines, vehicles, and machinery, organizations can monitor performance, detect anomalies, predict failures, and schedule maintenance proactively, improving reliability and uptime.

- Smart Cities and Infrastructure: In smart city initiatives, digital twins are used to model and manage urban infrastructure such as buildings, transportation systems, utilities, and public services. City planners and administrators use digital twins to optimize resource allocation, enhance infrastructure resilience, and improve urban sustainability and livability.

- Healthcare and Life Sciences: Digital twins can create personalized models of patients based on their medical data, genetics, and health history. This allows healthcare providers to simulate treatment outcomes, optimize therapies, and tailor interventions for individual patients, leading to improved outcomes.

- Supply Chain Optimization: Digital twins are used to model and simulate supply chain processes, logistics networks, and inventory management. Organizations can optimize supply chain operations, predict demand fluctuations, identify bottlenecks, and improve overall supply chain efficiency and resilience.

- Building and Facilities Management: Digital twins are used to monitor and control building systems such as HVAC, along with energy usage and occupant comfort. They help facility managers optimize building performance, reduce energy consumption, and ensure regulatory compliance.

- IoT and Industrial Internet of Things (IIoT): Digital twins are integrated with IoT sensors and data from connected devices in industrial settings. This allows real-time monitoring, analysis, and control of industrial processes, equipment, and production lines. This supports predictive maintenance, process optimization, and quality control.

- Environmental Modeling: Digital twins are used to model and simulate environmental systems such as weather patterns, water systems, and ecosystems. Environmental scientists and researchers can use digital twins to study climate change, predict natural disasters, and assess the impact of human activities on the environment.

As you can imagine, digital twins serve as powerful tools for simulation, monitoring, analysis, and optimization across various domains, enabling organizations to make better-informed decisions, improve efficiency, reduce costs, and drive innovation.
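To make the idea a little more concrete, here is a minimal Python sketch of the monitoring side of a digital twin, in the spirit of the asset management example above. It assumes a hypothetical `PumpTwin` class that mirrors a single temperature sensor and flags readings that deviate sharply (by z-score) from the recent history it holds. Real digital twins model far more (geometry, physics, many data streams); the class name, window size, and threshold are illustrative assumptions, not part of any Nvidia product or API.

```python
from collections import deque
from dataclasses import dataclass, field
from statistics import mean, stdev


@dataclass
class PumpTwin:
    """Hypothetical digital twin mirroring one pump's temperature sensor."""

    asset_id: str
    window: int = 20        # how many recent readings the twin keeps (assumed)
    threshold: float = 3.0  # z-score above which a reading is flagged (assumed)
    history: deque = field(default_factory=deque)

    def ingest(self, temperature_c: float) -> bool:
        """Mirror a new reading; return True if it deviates sharply from recent history."""
        anomalous = False
        if len(self.history) >= 5:  # wait for a small baseline before judging
            mu = mean(self.history)
            sigma = stdev(self.history)
            if sigma > 0 and abs(temperature_c - mu) / sigma > self.threshold:
                anomalous = True
        # Keep the twin's state in sync with the physical asset, bounded to `window`.
        self.history.append(temperature_c)
        while len(self.history) > self.window:
            self.history.popleft()
        return anomalous


if __name__ == "__main__":
    twin = PumpTwin("pump-07")
    readings = [60.1, 60.4, 59.8, 60.2, 60.0, 60.3, 75.0]
    print([twin.ingest(r) for r in readings])
    # → [False, False, False, False, False, False, True]
```

A z-score against a rolling window is about the simplest anomaly test there is; it was chosen here only because it shows the "mirror the asset, then reason about it" loop without extra dependencies.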

Unprecedented growth of unstructured data

All of this points to staggering and unprecedented growth in the data created by Generative AI and Large Language Models (LLMs). LLMs are a class of artificial intelligence models trained on vast amounts of text to understand and generate human-like language. These models use deep learning techniques to process and generate text. Some well-known examples include:

- The GPT (Generative Pre-trained Transformer) series developed by OpenAI, such as GPT-2, GPT-3, and later versions. These models are trained on a diverse range of text from the internet and can generate coherent, contextually relevant text with ever-increasing accuracy.

- BERT (Bidirectional Encoder Representations from Transformers), developed by Google, which focuses on understanding the context of words in a sentence. BERT is an encoder-only model used primarily for natural language understanding (NLU) tasks such as classification and sentiment analysis. Unlike GPT, it is not designed for open-ended text generation, although it can predict masked or missing words within a sentence.

- T5 (Text-to-Text Transfer Transformer), developed by Google, which frames every NLP task (translation, summarization, question answering, and even classification) as converting input text into output text. Because its output is always text, T5 can be fine-tuned for a wide range of text generation tasks.

What does this mean for data security?

These technologies require massive amounts of data, and their output is… wait for it… yet more data. The proliferation of data on-prem, in the cloud, and at the enterprise edge, along with the adoption of AI and generative AI workloads, not only creates the need for much more storage but also greatly increases your attack surface. It’s not just the outputs of Generative AI that need securing, but also the inputs, such as the data on which you train your AI. In a recent post, “Protecting your data supply chain against emergent cyberthreats,” Superna CTO Andrew MacKay discusses the havoc bad actors can wreak by corrupting training data, which could render your AI investment worthless.
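One basic safeguard for the input side is knowing when training data has changed. Below is a minimal Python sketch, assuming the training set lives as files under a single directory: it records a SHA-256 digest for every file, then compares a later snapshot against that baseline to list added, removed, or modified files. The function names are hypothetical, and this is a generic integrity check, not how Superna’s products work. It also only detects tampering after the baseline was taken; data that was already poisoned when the baseline was recorded will pass.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large training files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map every file under `root` (as a relative POSIX path) to its SHA-256 digest."""
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def diff_manifest(baseline: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """List files added, removed, or modified since the baseline was recorded."""
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
        "modified": sorted(
            k for k in baseline.keys() & current.keys() if baseline[k] != current[k]
        ),
    }


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        root = Path(d)
        (root / "train.csv").write_text("text,label\nhello,0\n")
        baseline = build_manifest(root)
        # Simulate tampering: flip a label and slip in an extra file.
        (root / "train.csv").write_text("text,label\nhello,1\n")
        (root / "extra.csv").write_text("text,label\nspam,0\n")
        print(diff_manifest(baseline, build_manifest(root)))
        # → {'added': ['extra.csv'], 'removed': [], 'modified': ['train.csv']}
```

The baseline manifest is only useful if it is stored somewhere the data’s writers cannot modify, such as a separate, access-controlled location; otherwise an attacker who can alter the data can simply rewrite the manifest as well.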

Addressing this properly requires a multi-pronged approach, including data classification tools to improve storage optimization, data lifecycle enforcement, security risk mitigation, and accelerated data workflow throughput. In its 2024 Strategic Roadmap for Storage, Gartner encourages organizations to take extra precautions against emergent data security threats, breaches, and ransomware by employing active cyberstorage defense capabilities that can robustly detect and thwart attacks as close to real time as possible.
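Near-real-time detection usually rests on behavioral signals. One widely used heuristic is that ransomware-encrypted output looks random, with a Shannon entropy, H = −Σ pᵢ log₂ pᵢ over byte values, close to 8 bits per byte. The Python sketch below uses a hypothetical `WriteBurstDetector` that flags a user who makes more than a set number of high-entropy writes within a sliding time window. The thresholds are illustrative assumptions, and this is a toy illustration of the general technique, not Superna’s or Gartner’s method. Compressed files (ZIP, JPEG, video) are also high-entropy, so a real system would combine many more signals to limit false positives.

```python
import math
from collections import Counter, defaultdict, deque


def shannon_entropy(data: bytes) -> float:
    """Entropy in bits per byte: near 8.0 for random-looking (e.g. encrypted) data."""
    if not data:
        return 0.0
    n = len(data)
    return -sum((c / n) * math.log2(c / n) for c in Counter(data).values())


class WriteBurstDetector:
    """Hypothetical heuristic: flag users making many high-entropy writes in a short window."""

    def __init__(self, entropy_min: float = 7.5, max_writes: int = 10, window_s: float = 60.0):
        self.entropy_min = entropy_min  # bits/byte treated as "looks encrypted" (assumed)
        self.max_writes = max_writes    # suspicious writes tolerated per window (assumed)
        self.window_s = window_s        # sliding window length in seconds (assumed)
        self._events = defaultdict(deque)  # user -> timestamps of suspicious writes

    def observe(self, user: str, timestamp: float, data: bytes) -> bool:
        """Record one write; return True while the user exceeds the burst threshold."""
        if shannon_entropy(data) < self.entropy_min:
            return False  # low-entropy writes (plain text, etc.) are not counted
        events = self._events[user]
        events.append(timestamp)
        # Forget suspicious writes that have aged out of the sliding window.
        while events and timestamp - events[0] > self.window_s:
            events.popleft()
        return len(events) > self.max_writes


if __name__ == "__main__":
    detector = WriteBurstDetector(max_writes=3, window_s=10.0)
    random_looking = bytes(range(256)) * 4  # every byte value equally often: 8.0 bits/byte
    plain_text = b"quarterly report draft " * 40
    print(detector.observe("bob", 0.0, plain_text))  # → False
    print([detector.observe("alice", float(t), random_looking) for t in range(5)])
    # → [False, False, False, True, True]
```

Counting per user within a time window, rather than per write, is what turns a noisy single-file signal into something closer to an attack pattern: one encrypted archive is normal, dozens of them in a minute from the same account is not.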

A new data security paradigm for Generative AI

Securing Generative AI will require an approach that addresses every aspect of the data supply chain: general safeguards and embedded security measures, overall transparency, investment in proactive defensive and offensive tools, and well-thought-out incident response methodologies. The diagram below illustrates the concept and drills down a bit deeper.

Summary

Staggering and unprecedented growth in the data created by Generative AI and Large Language Models has accelerated demand for speed and storage, but it has also greatly increased the enterprise attack surface. Securing the inputs and outputs of Generative AI will require a more holistic, proactive approach to data security, one that incorporates both defensive and offensive security measures.

Prevention is the new recovery

For more than a decade, Superna has provided innovation and leadership in data security and cyberstorage solutions for unstructured data, both on-premises and in the hybrid cloud. Our solutions are used by thousands of organizations globally, helping them close the data security gap with automated, next-generation cyber defense at the data layer. Superna is recognized by Gartner as a solution provider in the cyberstorage category and supports all major storage platforms, helping to ensure that your AI/ML initiatives are protected from both existing and emergent cyberthreats.

Superna… because prevention is the new recovery!
